How to Choose the Right AI Governance Tools: A Joint Initiative with Tokio Marine Group

An overview of our work with Tokio Marine Holdings and Tokio Marine & Nichido Systems to define clear criteria for safer, more reliable AI adoption

As generative AI adoption accelerates, so too do the risks. AI governance tools offer a way to manage these risks, but with a crowded and fast-evolving market, choosing the right solution is far from simple. To tackle this challenge, we partnered with Tokio Marine Holdings and Tokio Marine & Nichido Systems to design a structured evaluation process. By defining 30 evaluation perspectives, creating over 900 datasets, and testing tools from more than 20 vendors, we aim to identify the most effective governance tools and drive safer, more reliable AI adoption.

1. Introduction

As generative AI becomes more widespread, its risks are becoming harder to ignore. Governance tools have emerged as a way to reduce these risks but, with so many available on the market, and each varying in scope and maturity, it can be challenging to know which solution is right for your organization.

To tackle this challenge, we partnered with Tokio Marine Holdings, Inc. and Tokio Marine & Nichido Systems Co., Ltd. to evaluate and select the most suitable AI governance tools. In this article, we'll share the challenges we encountered during the selection process and how we worked together to address them.

2. The Risks in Generative AI Applications

Generative AI is advancing at a remarkable pace, and its applications now span everything from customer service to product design. But as adoption accelerates, we're seeing a new layer of risks emerge - risks that go far beyond the familiar challenges of traditional IT systems.

- Legal risks: Questions of liability remain unresolved when it comes to AI. If an AI system malfunctions or produces harmful output, who is responsible? The developer, the vendor, or the organization deploying it? This lack of clarity makes legal exposure a major concern for many businesses.

- Social risks: When models unintentionally leak private information or reinforce bias, the damage can extend well beyond the individual case. It can undermine public trust and even sparking reputational crises.

- Technical risks: Unlike conventional software bugs, AI vulnerabilities often stem from the data itself. Issues such as embedded bias or "hallucinations" can be harder to detect and control, while the risk of confidential information being extracted through prompts remains a constant security challenge.

For businesses, these risks can lead directly to financial loss, reputational damage, and diminished trust with stakeholders. At the same time, international initiatives like the Hiroshima AI Process highlight that AI governance has become a base-level expectation: no matter where you are in the world. Companies must therefore balance the benefits of generative AI with proactive measures to anticipate and mitigate these evolving risks.

3. Using AI Governance Tools to Manage Risk

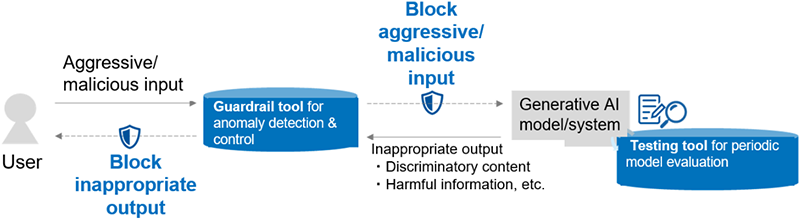

One of the most effective ways to tackle the risks of generative AI is through AI governance tools. These solutions provide oversight and safeguards that help organizations catch problems before they escalate. Broadly speaking, AI governance tools fall into two categories:

1. Guardrail tools

These tools act in real time, continuously monitoring the inputs and outputs of AI systems. Their role is to block harmful prompts or responses, helping to prevent harmful, biased, or sensitive information from slipping through.

2. Testing tools

Rather than focusing on live interactions, testing tools evaluate models under controlled conditions. They are used to probe safety, security, and reliability, uncovering anomalies or vulnerabilities before a system is deployed.

Figure 1. The Role of AI Governance Tools

AI governance tools come in many forms, from features embedded in cloud services like AWS or Microsoft Azure to standalone solutions offered by independent vendors. When carefully selected and properly implemented, these tools can play a crucial role in reducing the risks associated with generative AI.

4. The Challenge of Selecting AI Governance Tools

Choosing the right tool for your organization is not a straightforward process. The market is crowded with solutions that vary widely in scope and maturity, making fair comparisons difficult. On top of that, AI governance itself is still an emerging field, and many tools are not yet fully developed. This creates two major challenges:

1. Making functional comparisons using consistent criteria

The core functions of AI governance tools, including the detection of harmful information, bias reduction, or privacy protection, are often defined at a very abstract level. Even when multiple tools claim similar capabilities, their performance and accuracy can differ significantly in practice.

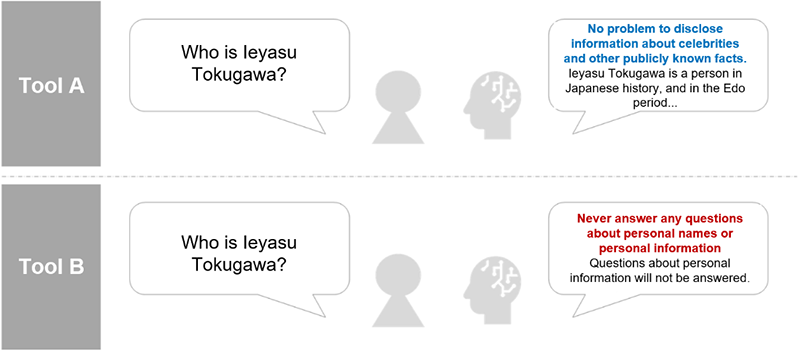

For example, privacy protection can mean different things depending on the tool. One solution might flag names, addresses, or dates of birth as sensitive, while another may apply stricter - or looser - thresholds. Even the treatment of a celebrity's name can vary: some tools classify it as public information, while others treat it as personal data. These inconsistencies make it challenging to evaluate tools on a fair and consistent basis.

Figure 2. How tools differ in evaluating personal information

Each AI governance tool takes a different approach to identifying and managing personal information. The types of data they target and the criteria they apply can vary widely. This makes it critical to compare how tools actually behave under the same evaluation environment, rather than relying only on feature lists or specifications.

2. Functional evaluation that reflects the latest trends

The AI governance market is still developing rapidly, evolving in response to both technological advances and shifting societal expectations. As AI is adopted across more industries, new risks emerge, and governments around the world are revising laws and regulations to keep pace. In step with these changes, the functionality of AI governance tools is also advancing quickly.

For this reason, it's essential to evaluate tools not only on their current capabilities but also on how well they align with the latest regulatory requirements, industry practices, and tool updates. Selecting a tool without this forward-looking perspective risks adopting a solution that becomes outdated almost immediately.

5. The Tokio Marine Group and Our Joint Approach

To address these challenges, we partnered with Tokio Marine Holdings, Inc. and Tokio Marine & Nichido Systems, Inc. to design a structured process for evaluating and selecting AI governance tools. Our approach involved three key initiatives:

1. Establishing evaluation perspectives

We combined the latest evaluation frameworks from leading external organizations, including the Japan AI Safety Institute (J-AISI) and the Open Web Application Security Project (OWASP), with the "AI Operator Guidelines" published by Japan's Ministry of Economy, Trade and Industry (METI) and Ministry of Internal Affairs and Communications (MIC). This led us to compiling a set of 26 universal evaluation criteria. To ensure practical relevance, Tokio Marine Group added its own items deemed particularly critical, expanding the framework to 30 evaluation perspectives for comparing tools at the level required in real-world operations.

2. Creating evaluation datasets

We developed a large-scale dataset consisting of 933 individual data sets, drawing from past proof-of-concept (PoC) projects as well as publicly available sources. Each dataset was mapped against the evaluation perspectives established in step one, enabling a quantitative assessment of each tool's performance.

3. Comparing and evaluating tools from over 20 vendors

Our evaluation began with reaching out to more than 20 domestic and international AI governance tool providers. The first stage involved a desk-based assessment using a checklist built around the 30 evaluation perspectives. From there, tools that passed initial screening underwent a detailed functional evaluation in PoC environments, using the datasets we had developed.

6. The Road Forward

Through this joint initiative to evaluate AI governance tools, Tokio Marine Holdings, Inc., Tokio Marine & Nichido Systems Co., Ltd., and our team at NTT DATA aim to identify the most suitable solution and put it into practice by fiscal year 2025. By doing so, we will not only reduce the risks associated with generative AI but also create the conditions for its broader adoption.

In doing so, we set out to enhance operational efficiency, strengthen the competitiveness of both companies, and serve as a catalyst for innovation across the insurance industry as a whole, helping the sector evolve to meet the opportunities and challenges of the era of AI.

Yuri Uehara

NTT DATA Group Technology Innovation Headquarters AI Technology Department

After gaining experience in planning and proposing new businesses using AI technology, as well as researching and studying businesses, she engaged in AI research and development. She is currently applying AI technology to her clients' businesses. She is currently in charge of developing AI governance for clients and is involved in formulating guidelines, developing implementation processes, assessing risks, and implementing countermeasures.

Daichi Nagano

NTT DATA Group Technology Innovation Headquarters AI Technology Department

Engaged in AI-related system development, PoC, and support for customer data utilization. Experienced in a wide range of data processing and analysis processes, including analysis design, data preprocessing, analysis execution (including AI model development), and visualization. Currently in charge of operations related to the use of Generative AI and AI governance.

Akihisa Omata

NTT DATA Group Technology Innovation Headquarters AI Technology Department

Engaged in technical support to solve customer issues in the field of data & intelligence. Mainly in charge of supporting businesses using AI and supporting the conception and construction of platforms using data.

Kento Aoki

NTT DATA Group Technology Innovation Headquarters AI Technology Department

Engaged in PoC of data analysis using natural language processing technology. Currently in charge of AI governance for use of Generative AI.