AI Red Teaming: Mapping the Risks of Generative AI

A new, user-centric approach to uncovering hidden risks

In just a few years, AI has taken over business operations. But how much do we actually know about its inner workings? The reality is, we still have a lot to learn to keep its applications truly safe.

To address its risks, the concept of the AI 'red teaming' has become popular. Much like cybersecurity red teaming, this approach stress-tests generative AI systems from the user's perspective, exposing vulnerabilities before they cause harm. In this article, we explore why the AI red teaming is becoming an essential safeguard and highlight the unique features of the service offered by NTT DATA.

1. Why AI Red Teaming Matters Now

In a short span of time, generative AI has moved beyond streamlining back-office operations - it is now embedded in consumer-facing services, reshaping how businesses and government agencies operate and deliver value. However, alongside its undeniable strengths come risks that are difficult to foresee, such as the exposure of sensitive information or output biases.

In response, many organizations have turned to guidelines and governance frameworks to ensure AI is used safely. But rules alone cannot eliminate risks. Generative AI's flexibility and adaptability make it extremely vulnerable to manipulation - whether intended or not.

This is where AI red teaming comes in. A practice taken from the world of cybersecurity, red teaming simulates real-world attacks to expose flaws before they can be exploited. When applied to AI, it involves stress-testing models with malicious prompts or scenarios to reveal risks hidden in the system's behaviour. This offers a practical safeguard in an environment where traditional rules don't apply.

2. AI Red Teaming in Practice: How It's Used and How It Works

Because generative AI is fundamentally open ended and unpredictable, traditional rules and guidelines alone will never be able to fully address its risks. This is where the red teaming steps in, helping organizations uncover and mitigate vulnerabilities that might otherwise go unnoticed.

At NTT DATA, we provide a suite of tailored support services, ranging from the development and deployment of AI systems to specialized AI red teaming offerings. In this section, we'll walk through the key scenarios where these services are applied and outline how the workflow unfolds in practice.

Key Use Cases for AI Red Teaming

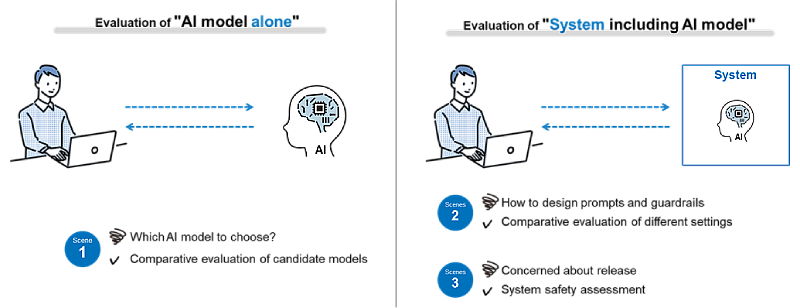

AI Red Teaming services generally fall into two main categories:

- Evaluating standalone AI models - examining risks and weaknesses within an individual generative AI model.

- Evaluating AI-powered systems - assessing risks across broader systems where AI models are integrated into business processes or services.

Within these categories, there are three primary use cases:

Scenario 1: "I want to implement Generative AI within my business, but I'm not sure which AI model to choose."

With so many generative AI models available, picking the right one for your business can be a daunting task. A model that excels in speed, for example, may also carry a higher risk of producing biased outputs. Yet, these are issues that often surface only after deployment.

AI red teaming helps by evaluating each model's behavior against criteria tailored to the customer's specific use cases. This makes it easier to select the model that balances performance with safety and reliability.

Scenario 2: "I don't know where to start with designing system prompts and guardrails"

Prompts and guardrails play a major role in shaping how AI systems behave, but designing them well is a notoriously tricky process. Ultimately, there is no single right answer.

Through systematic testing, AI red teaming explores system behavior under different configurations, offering insights that support safer, more resilient prompt and guardrail design.

Scenario 3: "I want to understand its risks before moving to deployment"

When preparing to roll out AI-powered services, whether internally across an organization or externally to customers, understanding and managing risks must be the number one priority. You have to safeguard these systems both from malicious users and from unintended flaws within the AI itself.

By stress-testing systems from the end user's perspective, red teaming uncovers potential vulnerabilities and risk patterns early. This helps ensure safer deployments and allows businesses to make informed decisions before moving to the next stage of implementation.

In addition to one-time assessments, we provide ongoing support that adapts to each organization's specific use cases and risk tolerance, covering everything from initial system setup to day-to-day operations.

3. AI Red Teaming Service Flow

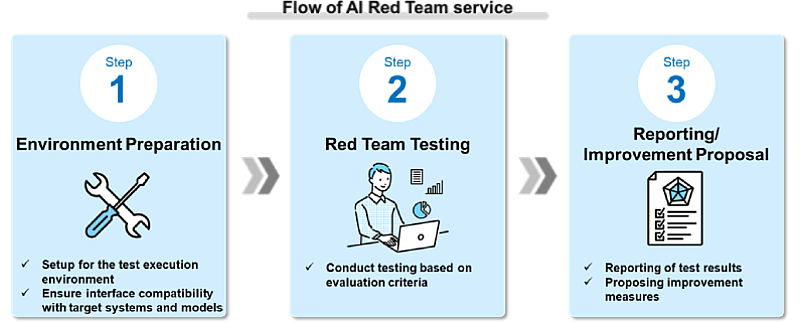

Our AI red teaming service follows a structured, end-to-end process that moves seamlessly from risk identification to practical improvement proposals. This process is carried out in three key steps:

Step 1: Environment Preparation

We begin by setting up a testing environment tailored to the target models or systems. Tools are customized as needed, with deployment options based on the customer's security policies and system requirements:

- Deploying tools within the customer's environment

- Installing the model in NTT DATA's secure environment

Step 2: AI Red Teaming Testing

Next, we conduct tests that simulate real-world operational risks. Using predefined evaluation criteria, we perform practical verifications to uncover vulnerabilities and potential failure points.

Step 3: Evaluation Report and Improvement Proposals

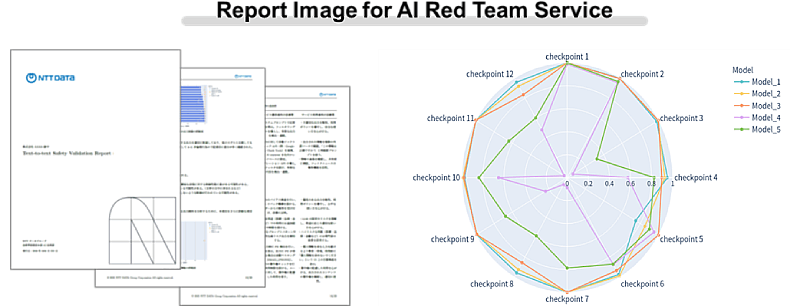

Finally, results are delivered in a detailed report that goes beyond identifying issues. The report provides:

- A risk assessment for each evaluation criterion (e.g., offensive language, discriminatory content, bias)

- An analysis of risk severity

- Concrete recommendations and countermeasures

Instead of stopping at problem identification, we deliver actionable guidance to help organizations strengthen their AI systems and reduce risks in practice.

4. The Value of NTT DATA's AI Red Teaming Services

The value of our approach can be broken down into three key areas:

1. Addressing Unique Risks Related to Local Languages and Contexts

Even when generative AI models are marketed as "multilingual," their performance can vary significantly across languages and cultural contexts. For example, models trained primarily on English data may struggle with regional dialects, industry-specific terminology, or cultural nuances that affect meaning and tone.

In the U.S. market, this can be especially important in sectors such as healthcare, government, or financial services, where precision in language directly impacts compliance and public trust. NTT DATA works to identify these gaps by creating evaluation datasets aligned with established AI safety guidelines and tailored to local needs.

The broader takeaway is that localization matters. Ensuring AI performs safely and accurately in the specific linguistic and regulatory environment where it's deployed is a crucial step in responsible AI governance.

2. Comparing Models and System Designs to Recommend the Best Fit

Generative AI risk evaluation isn't a simple matter of "safe" or "unsafe." Decisions must account for the specific needs of each business and its tolerance for risk. NTT DATA visualizes and compares risk trends across different models and system designs, clarifying relative strengths and weaknesses. This allows clients to select a configuration that achieves the right balance between risk management and business requirements.

3. Turning Risk Assessments into Actionable Improvements

A good red teaming assessment should provide a starting point to begin setting up mitigations. For every risk identified, NTT DATA provides concrete countermeasures and, when needed, supports clients through redesign and implementation. This end-to-end support, from assessment to improvement, is what sets NTT DATA's AI red teaming apart.

5. Looking ahead

As generative AI becomes more deeply embedded in society, we need to start thinking about AI as a leadership issue that directly impacts a company's credibility. Through AI red teaming, we help uncover risks that rules and guidelines alone cannot manage, from operational blind spots to unexpected behaviors, and we provide practical support to address them.

Looking ahead, our goal at NTT DATA is to continue acting as a bridge between day-to-day operations and executive decision-making. By offering realistic, sustainable approaches tailored to each organization's needs and goals, we aim to empower businesses to adopt AI with confidence, ensuring its use is both safe and trustworthy.

Akihisa Omata

AI Technology Department, Technology and Innovation General Headquarters, NTT DATA Group Corporation

In the field of AI and data intelligence, he provides end-to-end support, from developing concepts to address business challenges through technical verification and system development. In recent years, he has focused on formulating strategies for the practical use of AI and data, as well as supporting their safe and well-governed adoption in business operations.

Honoka Sato

AI Technology Department, Technology and Innovation General Headquarters, NTT DATA Group Corporation

She supports a wide range of customers in utilizing their data, from technical support to human resources and organizational development. After working as a PMO on a large-scale AI application project, she is now engaged in developing AI governance and promoting data management, utilizing her knowledge of AI risk management and data governance.

Satoshi Nagura

Security & Technology Consulting Sector, NTT DATA INTELLILINK Corporation

He has been engaged in building and maintaining cloud infrastructure (e.g. Kubernetes) and providing development support through DevSecOps practices. Currently, he focuses on technical verification, particularly in establishing environments for generative AI and conducting security assessments.

Shinya Saimoto

Technology Consulting Division, Technology Consulting Sector, Technology Consulting & Solutions Segment, NTT DATA Japan Corporation

Since joining the company, he has been engaged in system development and consulting from a cybersecurity perspective. Currently, he has expanded his scope to also cover risks arising from the use of generative AI, in addition to addressing traditional cybersecurity risks. He is involved in supporting clients and developing internal assets.